VIBEYES

Design project for the deafblind

VIBEYES was a semester project at the Design School Offenbach (Hfg-Offenbach) that tried to provide a solution for the communication of a special group in our society, the deafblind, using cutting-edge technologies.

Project type /

Semester project

Role /

Product designer

Agency /

Hfg-Offenbach

Year /

2019

Research

The experimental psychologist Red Rhett Pull (Treicher) conducted two well-known psychological experiments, one of which examined the main channels through which humans obtain information. From many experiments he concluded that humans obtain 83% of information through sight, 11% through hearing, 3.5% through smell, 1.5% through touch, and 1% through taste.

We visited the nursing institute in Fulda and talked with the staff about the lives of deafblind people.

photo by Yudan Chen

Problem & Solution

Two problems emerged from observation.

First, the deafblind lose the direction of communication. The relative direction of the conversation partner is important to a conversation; without it, communication becomes meaningless.

Second, emotional exchange is important to a conversation. People use facial expressions and body movements to exchange emotions. For the deafblind, who have lost both sight and hearing, this channel of emotional exchange is completely unusable.

The aim of the project is to rebuild this channel with the help of technology.

1. VIBEYES is an add-on that improves the daily communication of the deafblind. In the usual scenario, a deafblind person must always be accompanied by an interpreter who translates tactile language into spoken language and back.

2. The deafblind have no way to receive social signals.

3. With computer vision and a recognition algorithm, VIBEYES can capture emotional signals and translate them into vibration signals that transfer the information to the user.

4. The matrix makes it possible to transfer different kinds of information. In addition, the system can present the information according to the direction of the signal's source, which helps the user build up the conversation successfully.

5. With the help of this emotional information, the deafblind can react as naturally as anyone else.
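The directional idea in point 4 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 3 x 5 matrix size, the 120 degree field of view, and the function names `direction_to_column` and `place_signal` are hypothetical and do not come from the project.

```python
from typing import List

# Hypothetical matrix size; the project's real motor layout is not given here.
N_ROWS, N_COLS = 3, 5


def direction_to_column(angle_deg: float, n_cols: int = N_COLS,
                        fov_deg: float = 120.0) -> int:
    """Map a horizontal angle to a motor column.

    -fov/2 is the far left, +fov/2 the far right; angles outside the
    assumed field of view are clamped to the nearest edge.
    """
    half = fov_deg / 2.0
    clamped = max(-half, min(half, angle_deg))
    fraction = (clamped + half) / fov_deg  # 0.0 (left) .. 1.0 (right)
    return min(n_cols - 1, int(fraction * n_cols))


def place_signal(column: int, intensity: int,
                 n_rows: int = N_ROWS, n_cols: int = N_COLS) -> List[List[int]]:
    """Return a frame in which only the chosen column vibrates."""
    frame = [[0] * n_cols for _ in range(n_rows)]
    for row in frame:
        row[column] = intensity
    return frame


if __name__ == "__main__":
    # A speaker standing straight ahead activates the middle column.
    for row in place_signal(direction_to_column(0.0), 200):
        print(row)
```

A speaker to the user's left would activate a column on the left of the matrix, so the position of the vibration itself carries the direction of the conversation partner.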

Machine Learning & Emotion Recognition

With the help of Python facial recognition and computer vision, the prototype was built and tested. The program linked the camera and the vibration servo motors through data transfer, so that the vibration could be controlled by the emotions captured by the camera. The picture below shows the recognition of 6 basic emotions.

Python can also be connected to other software. The video below shows the connection with Grasshopper, where the transformation of an object can be controlled by facial expressions.
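One practical detail when a camera drives vibration motors is that per-frame recognition results tend to flicker. The sketch below shows one possible way to stabilize them with a majority vote over the most recent frames. It is not the project's code: the class name `EmotionSmoother`, the window size, and the example labels are assumptions, and the labels are expected to come from whatever facial-recognition step is in use.

```python
from collections import Counter, deque
from typing import Optional


class EmotionSmoother:
    """Report an emotion only when it holds a strict majority of recent frames."""

    def __init__(self, window: int = 5) -> None:
        self.window = window
        self.recent = deque(maxlen=window)  # oldest labels drop out automatically

    def update(self, label: str) -> Optional[str]:
        """Add the newest per-frame label and return the stable emotion, if any."""
        self.recent.append(label)
        winner, count = Counter(self.recent).most_common(1)[0]
        # Require more than half of the full window, so a single stray
        # frame cannot switch the vibration pattern.
        if count * 2 > self.window:
            return winner
        return None


if __name__ == "__main__":
    smoother = EmotionSmoother(window=3)
    # Hypothetical label stream; the emotion names are placeholders.
    for label in ["happy", "happy", "sad", "sad"]:
        print(label, "->", smoother.update(label))
```

Only the stable result would then be forwarded to the vibration motors, which keeps the tactile signal calm even if single frames are misclassified.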

Concept & Development

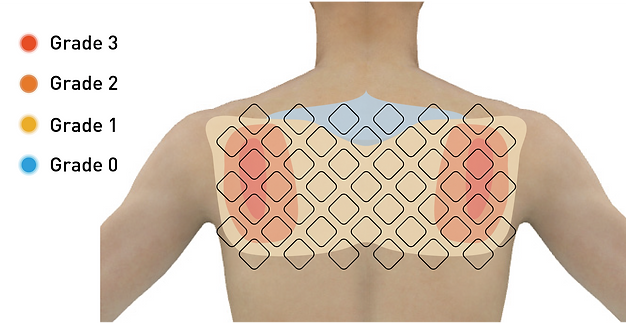

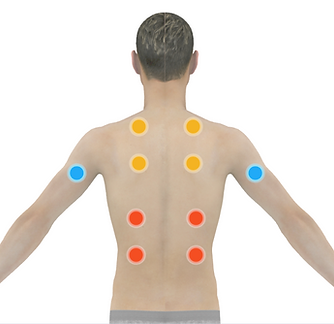

The sensitivity test aimed to find the ideal area for vibration on the surface of the human body. The points show sensitivity grades from 0 to 3, ranging from almost no feeling to very sensitive. As a result, the back of the upper body proved to be an ideal area for the vibration.
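Grades like these can be compared by averaging them per body region. The following sketch shows the idea only; the function name `rank_regions` is hypothetical, and the numbers in the example are made-up placeholders, not the measurements from the project.

```python
from statistics import mean
from typing import Dict, List


def rank_regions(grades: Dict[str, List[int]]) -> List[str]:
    """Sort body regions from most to least sensitive by mean grade (0 to 3)."""
    for region, values in grades.items():
        if not values:
            raise ValueError(f"no grades recorded for {region!r}")
        if any(v not in (0, 1, 2, 3) for v in values):
            raise ValueError(f"grades for {region!r} must be integers 0 to 3")
    return sorted(grades, key=lambda region: mean(grades[region]), reverse=True)


if __name__ == "__main__":
    # Placeholder values for illustration only, NOT the project's data.
    placeholder = {
        "upper back": [3, 3, 2],
        "forearm": [2, 1, 2],
        "thigh": [1, 0, 1],
    }
    print(rank_regions(placeholder))
```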

Concept & Pattern Language

Based on the theory of Robert Plutchik, emotions are defined as 8 basic emotions, which combine with one another to form all other emotions. In this project, only 6 basic emotions were selected to show how the system works.

The character of each emotional expression can be defined in this polygon matrix, as shown in the graphic below. Based on the sensitivity research, the emotions were defined as 6 non-interfering patterns, so that each emotion can be clearly felt through the vibration.
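One simple way to check that a set of patterns does not interfere is to describe each pattern as a set of motor positions and verify that no two patterns share a motor. This reading of "non-interfering" is an assumption, as are the emotion names, the 3 x 4 grid, and the cell assignments below; the project's actual polygon matrix is shown in its graphics, not here.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Tuple

Cell = Tuple[int, int]  # (row, column) of one vibration motor

# Hypothetical layout: 6 patterns on a 3 x 4 grid, two motors each.
PATTERNS: Dict[str, FrozenSet[Cell]] = {
    "happy": frozenset({(0, 0), (0, 1)}),
    "sad": frozenset({(0, 2), (0, 3)}),
    "angry": frozenset({(1, 0), (1, 1)}),
    "afraid": frozenset({(1, 2), (1, 3)}),
    "surprised": frozenset({(2, 0), (2, 1)}),
    "disgusted": frozenset({(2, 2), (2, 3)}),
}


def overlapping_pairs(patterns: Dict[str, FrozenSet[Cell]]) -> List[Tuple[str, str]]:
    """Return every pair of patterns that shares at least one motor."""
    return [
        (name_a, name_b)
        for (name_a, cells_a), (name_b, cells_b) in combinations(patterns.items(), 2)
        if cells_a & cells_b
    ]


if __name__ == "__main__":
    print(overlapping_pairs(PATTERNS))  # an empty list means no shared motors
```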

Prototyping & Pattern Language

The vibration pattern was transferred into a control matrix in the Arduino IDE. With the connection between the Python program and the Arduino hardware, the prototype ran successfully.
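On the Python side, a control matrix can be flattened into a small byte frame before it is sent to the board. The sketch below is one possible framing, not the project's protocol: the start byte `0xFF`, the 0 to 254 intensity range, and the name `encode_frame` are assumptions.

```python
from typing import Sequence

START_BYTE = 0xFF  # assumed frame marker; intensities are capped at 254 to avoid it


def encode_frame(matrix: Sequence[Sequence[int]]) -> bytes:
    """Flatten a matrix of motor intensities into one frame, row by row.

    The frame is: START_BYTE, then one byte per motor (clamped to 0..254).
    """
    payload = bytearray([START_BYTE])
    for row in matrix:
        for value in row:
            payload.append(max(0, min(254, int(value))))
    return bytes(payload)


if __name__ == "__main__":
    frame = encode_frame([[0, 200, 0], [0, 200, 0]])
    print(frame.hex(" "))
    # Sending would need the third-party pySerial package, for example:
    #   import serial
    #   with serial.Serial("/dev/ttyUSB0", 9600) as port:  # port and baud rate are assumptions
    #       port.write(frame)
```

The Arduino sketch would then wait for the start byte, read one byte per motor, and set each motor's intensity accordingly. Reserving one value as a marker lets the receiver resynchronize if a byte is lost.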

Design & Details